Hoffman2:Introduction


Latest revision as of 18:15, 16 February 2021

What is Hoffman2?

The Hoffman2 Cluster is a campus computing resource at UCLA, named for Paul Hoffman (1947-2003). It is maintained by IDRE at UCLA, and the main official webpage is here. With many high-end processors, data storage systems, and backup technologies, it is a useful tool for running research computations, especially when working with large datasets. More than 1000 users are currently registered and the cluster sees tremendous usage: in September 2014 alone, more than 5.5 million compute hours were logged. See more usage statistics here. Click here to find out how to join.

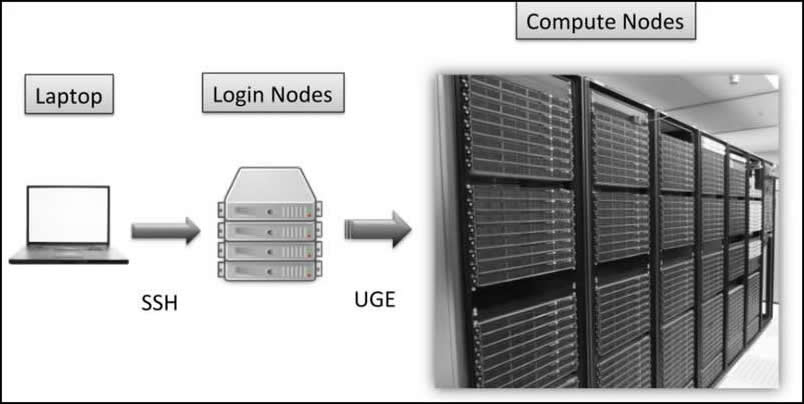

Anatomy of the Computing Cluster

What does Hoffman2 consist of?

- Login Nodes

- Computing Nodes

- Storage Space

- Univa Grid Engine

**Image taken from a previous ATS "Using Hoffman2 Cluster" slide deck**

Login Nodes

There are four login nodes which allow you to access and interact with the Hoffman2 Cluster. These are essentially four dedicated computers that you can SSH into and use to look at and edit your files or submit computing jobs to the queue (more on what the queue is in a bit). It is important to remember that these are four computers being shared by ALL the Hoffman2 users. Doing ANY type of heavy computing on these nodes is frowned upon. If you are:

- moving lots of files

- calculating the inverse solution to an EEG signal, or

- running a bunch of Python scripts to extract tractography of a brain

You should NOT be doing this on a login node. If the sysadmins at ATS find any process that is taking up too many resources on the login nodes, they reserve the right to terminate the process immediately.
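If you write scripts that do heavy work, a small guard can stop them from accidentally running on a login node. The sketch below assumes standard Grid Engine behavior: the `JOB_ID` environment variable is set inside batch (`qsub`) and interactive (`qrsh`) jobs and is normally unset on a login node. The function name `require_compute_node` is a hypothetical choice, not a Hoffman2 command.

```shell
#!/bin/bash
# Hypothetical guard: refuse to start heavy work unless we appear to be
# inside a Grid Engine job. Assumption: Grid Engine sets JOB_ID in a job's
# environment, and it is unset in an ordinary login-node shell.
require_compute_node() {
    if [ -z "${JOB_ID:-}" ]; then
        echo "No JOB_ID found: this looks like a login node. Submit a job instead." >&2
        return 1
    fi
    return 0
}
```

Placed near the top of a heavy analysis script, `require_compute_node || exit 1` makes the script stop immediately when someone runs it directly on a login node.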

Computing Nodes

As of April 2014, Hoffman2 is made up of more than 12,000 processors across three data centers, and this number continues to grow as the cluster is expanded. The individual processor cores are where your programs get executed when you submit a job to the cluster. You can request different amounts of resources, such as how much RAM or how many CPU cores your job needs.
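For a rough illustration of what requesting resources looks like, here is a minimal batch job script sketch. It assumes the Grid Engine directives commonly documented for Hoffman2: `h_rt` (wall-clock time limit), `h_data` (memory, which on Hoffman2 is, to our understanding, counted per core, so this asks for about 16GB in total), and the `shared` parallel environment for several cores on one node. Check the official job submission documentation before relying on these values. `my_analysis.py` is a hypothetical placeholder.

```shell
#!/bin/bash
# Hypothetical job script sketch. Grid Engine reads the "#$" lines when the
# file is submitted with qsub; bash itself treats them as ordinary comments.
# Run the job from the directory it was submitted from:
#$ -cwd
# Merge stderr into stdout:
#$ -j y
# Wall-clock limit of 2 hours and 4GB of memory (per core, as we understand it):
#$ -l h_rt=2:00:00,h_data=4G
# Four cores on a single node:
#$ -pe shared 4

# Grid Engine sets NSLOTS to the number of cores granted; default to 1
# so the script can also be run outside a job for a quick test.
threads="${NSLOTS:-1}"
echo "Job ${JOB_ID:-test} using ${threads} core(s) on ${HOSTNAME:-unknown}"

# Replace the line below with your real work (my_analysis.py is hypothetical):
# python3 my_analysis.py --threads "$threads"
```

Saved under a name such as `myjob.sh` (also hypothetical), it would be submitted from a login node with `qsub myjob.sh`; the scheduler, not the login node, then runs it.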

There is also a GPU cluster with more than 300 nodes, but access to it must be requested separately from a normal Hoffman2 account. For more information, go here.

The number of computing cores continues to grow because more resource groups (such as individual research labs) join Hoffman2 and buy nodes to be integrated into the cluster. Nodes contributed by a resource group are guaranteed to that resource group and can be used to run longer jobs (up to 14 days). As of June 2013, the Cohen and Bookheimer groups on Hoffman2 have 96 cores:

- 6 nodes (installed pre-2010), each with

  - 8 cores

  - 8GB RAM

- 3 nodes (installed Fall 2012), each with

  - 16 cores

  - 48GB RAM
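The 96-core total follows directly from the node list above, and the same arithmetic gives the groups' combined RAM as of June 2013:

$$
6 \times 8 + 3 \times 16 = 48 + 48 = 96 \ \text{cores}
$$

$$
6 \times 8\,\text{GB} + 3 \times 48\,\text{GB} = 48\,\text{GB} + 144\,\text{GB} = 192\,\text{GB of RAM}
$$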

Use the command `mygroup` to see what resources you have available.

Storage Space

For official and up-to-date information about storage space, click here. If you want a quick overview, see below.

Long Term Storage

IDRE maintains high-end storage systems (BlueArc and Panasas) for Hoffman2 disk space. These have built-in redundancies and are fault tolerant. Redundant backups are also available.

If all of that sounded Greek to you, the important thing to understand is that there is a lot of disk space on Hoffman2 and IDRE takes great pains to keep your data safe. If you are paranoid, alternative backups are available.

Home Directories

When you log in to Hoffman2, you are dropped into your home directory immediately. Home directory locations follow the pattern

/u/home/[u]/[username]

where [u] is the first letter of the username, e.g.

/u/home/j/jbruin # Common home directory

/u/home/t/ttrojan

Your home directory is where you can keep your personal files (papers, correspondence, notes, etc.) and files you change frequently (source code, configuration files, job command files). **It is not the place for your large datasets for computing.** Data in your home directory is accessible from all login and computing nodes.

Every user may store up to 20GB of data in their home directory. If you are part of a cluster-contributing group, you can also store data in that group's common space, described in the next section.
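The path pattern can be written as a tiny shell function. This is a sketch for illustration only: `home_dir_for` is a hypothetical helper, not a Hoffman2 command, and on the cluster itself `$HOME` already holds your own home directory.

```shell
#!/bin/bash
# Hypothetical helper: build a home directory path from a username using
# the pattern /u/home/[u]/[username], where [u] is the first letter.
home_dir_for() {
    local user="$1"
    # ${user:0:1} is bash substring expansion: the first character.
    printf '/u/home/%s/%s\n' "${user:0:1}" "$user"
}
```

For example, `home_dir_for ttrojan` prints `/u/home/t/ttrojan`. For a rough idea of how close you are to the 20GB limit, `du -sh "$HOME"` reports the total size of your home directory.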

Group Directory

Group directories are given to groups that purchase extended storage space (in 1TB/1 million file increments for three-year periods, as of Summer 2013). This is common space designed for collaboration and is where your datasets should mainly be stored. Individual users are given directories under the main group directory to help organize data ownership. For example:

/u/project/mscohen # Common group directory

/u/project/mscohen/data # Common group "data" directory, create subdirectories for specific shared projects

/u/project/mscohen/aaronab # mscohen group directory for the user aaronab, different from their /u/home/a/aaronab home directory

/u/project/mscohen/kerr # mscohen group directory for the user kerr, different from their /u/home/k/kerr home directory

These directories are accessible from all login and computing nodes.

**These directories have limits on how many files can be put in them and how much space those files can take up.**

- When a group buys in for 1TB/1 million files, their quota is considered met when they have EITHER

  - 1TB worth of files, OR

  - 1 million files

- When a group buys in for 4TB/4 million files, their quota is considered met when they have EITHER

  - 4TB worth of files, OR

  - 4 million files


**Once a group's quota has been reached, everyone in that group is automatically and immediately prevented from creating any more files in the group directory.** This means any computing jobs you are running may fail because they cannot write out their results. You may also have trouble starting GUI sessions because temporary files cannot be created.

Read about how to monitor your disk quota here.
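The EITHER rule above can be expressed as a small check. This is a sketch only: `quota_met` is a hypothetical helper that takes usage numbers you have obtained elsewhere (for example from the quota tools described on the quota page), and it assumes 1TB means 10^12 bytes, which may not match the cluster's own accounting.

```shell
#!/bin/bash
# Hypothetical helper for a group that bought N units of 1TB/1 million files.
# Usage: quota_met USED_BYTES USED_FILES UNITS
# Returns 0 (true) if EITHER the size limit OR the file-count limit is reached.
# Assumption: 1TB = 10^12 bytes.
quota_met() {
    local used_bytes="$1" used_files="$2" units="$3"
    local limit_bytes=$(( units * 1000000000000 ))
    local limit_files=$(( units * 1000000 ))
    if (( used_bytes >= limit_bytes || used_files >= limit_files )); then
        return 0
    fi
    return 1
}
```

For example, a 1TB/1 million file group holding only 0.5TB spread over 1.2 million files has still met its quota, because the file-count limit is reached first. Many small files can fill a quota long before the disk space runs out.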

Temporary Storage

When running a computing job on Hoffman2, reading and writing many files in your home directory can be slow, so faster temporary storage is available for running jobs. Read the official description here.

scratch

/u/scratch/[u]/[username]

Here [username] is replaced with your Hoffman2 username and [u] with the first letter of your username. Data here is accessible from all login and computing nodes. You can use up to 2TB of space here, but **any files older than 7 days may be automatically deleted by the system**. Use the UNIX environment variable `$SCRATCH` to reliably access your personal scratch directory.
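Here is a sketch for working with scratch. `scratch_dir` prefers `$SCRATCH` and falls back to the documented path pattern, and `old_scratch_files` lists files that are already past the 7-day window. Both function names are hypothetical. The listing also assumes the cleanup is based on file modification time, which the description above does not specify.

```shell
#!/bin/bash
# Hypothetical helper: resolve your scratch directory, preferring $SCRATCH
# and falling back to the pattern /u/scratch/[u]/[username].
scratch_dir() {
    printf '%s\n' "${SCRATCH:-/u/scratch/${USER:0:1}/${USER}}"
}

# Hypothetical helper: list regular files last modified more than 7 days
# (7 * 24 * 60 = 10080 minutes) ago. Assumption: the purge looks at
# modification time. Defaults to your scratch directory if no argument.
old_scratch_files() {
    local dir="${1:-$(scratch_dir)}"
    find "$dir" -type f -mmin +10080
}
```

Anything `old_scratch_files` prints should be copied to your home or group directory right away if you still need it. Treat scratch as a place for job inputs and outputs in progress, not for storage.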

Cold Storage

Cold storage is the idea of storing data away for a long time. Disk space on Hoffman2 can be very expensive, and IDRE offers a cloud archival storage service; see here.

Univa Grid Engine

The Univa Grid Engine (UGE) is the brains behind how jobs get executed on the cluster. When you request that a script be run on Hoffman2, UGE looks at the resources you requested (how much memory, how many computing cores, how many computing hours, etc.) and puts your job in a queue (a waiting line, for those not familiar with British English) based on those requirements. Less demanding jobs generally start sooner, while more demanding ones must wait for adequate resources to free up. UGE tries to schedule jobs on computing nodes so as to make the most efficient use of the available resources.

Queues

There is more than one queue on Hoffman2. Each is for a slightly different purpose:

- interactive: for jobs requesting at most 24 hours of computing time that require the user to interact with the running program.

- highp: for jobs requesting at most 14 days of computing time. These are required to run on nodes owned by your group.
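The two time limits above can be captured in a small check you might run before submitting. `max_hours_for_queue` and `fits_queue` are hypothetical helpers that only know about the two queues listed here. They do not query the scheduler, so the official queue documentation remains the authority.

```shell
#!/bin/bash
# Hypothetical helper: maximum run time in hours for the two queues described
# above (interactive: 24 hours; highp: 14 days). Other queues are not covered.
max_hours_for_queue() {
    case "$1" in
        interactive) echo 24 ;;
        highp)       echo $(( 14 * 24 )) ;;
        *)           return 1 ;;
    esac
}

# Hypothetical helper: return 0 if a requested run time (whole hours)
# fits within the queue's limit; fail for unknown queues.
fits_queue() {
    local queue="$1" hours="$2" max
    max=$(max_hours_for_queue "$queue") || return 1
    (( hours <= max ))
}
```

If the check passes, the request itself would, to our understanding of Hoffman2's Grid Engine setup, look like `qrsh -l h_rt=8:00:00,h_data=4G` for an interactive session, or a `#$ -l h_rt=72:00:00,h_data=4G,highp` line in a batch job script. Confirm the exact syntax in the job submission documentation.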

And there are others. Read about them here.

Find out how to submit computing jobs to the Hoffman2 Cluster.